INTRODUCTION

Peer feedback is increasingly acknowledged as an effective means for facilitating learning (Dochy et al., 1999; Nicol et al., 2014). Research suggests that in order for students to learn from feedback, it is vital that they engage with it through asking questions, discussing the feedback, and constructing their own meaning from it (Black et al., 2004; Ellegaard et al., 2018; Doyle et al., 2019; Winstone et al., 2021). At the same time, research emphasizes that for feedback to be effective, students need to use it in new situations consciously. In this regard, peer feedback has great potential, as the active role of students, which is implicit in peer feedback, can be extended to their involvement in e.g., formulating criteria, and enhanced use of both criteria and feedback. However, whether this is achieved depends on how and why peer feedback is implemented. To this end, Gielen et al. (2011) amassed an overview of the diversity of peer assessment and, building on the work of Topping (1998), formulated a list of variables that characterize this diversity. Moving from pedagogical and didactical theory to practice can be a challenging step, and models based on practical examples can help bridge this gap (e.g., May et al., 2016). Since there are relatively few cases in the literature where detailed discussions of specific examples include contextualized peer feedback design principles, our aim is to facilitate the bridging of this gap through analysis of empirical examples of peer feedback in different settings. To address this aim, we, first, formulate a set of themes and associated questions that should be addressed when implementing peer feedback. Second, we investigate, compare and analyze six practical peer feedback examples based on the formulated questions.

Peer feedback is frequently implemented as a response to a particular educational challenge or problem, and this is often framed as reasons for or benefits of peer feedback (Cate & Durning, 2009). Furthermore, good feedback should provide opportunities to close the gap between current and desired performance (Lizzio & Wilson, 2008; Nicol & MacFarlane, 2006; Sadler, 1989).

However, a central point to be aware of when implementing peer feedback is that the roles of the teacher, the student, and the assignment/product change. We find it meaningful to address these shifts in terms of renegotiations of the didactic contract (Brousseau, 1997). The didactic contract can be seen as an “implicit contract that concerns reciprocal and specific expectations with regard to the element of knowledge that is taught and learned” (Verscheure & Amade-Escot, 2007, p. 248, lines 6–8). Being implicit, the didactic contract is neither written out nor formalized, but it does involve negotiations of expectations between students and teacher. One can talk about a standard version of the contract, and in this version, the role of the student is to submit an assignment, while the role of the teacher is to assess the assignment. In connection with peer feedback, this contract needs to be renegotiated, because the roles need to change. The role of the teacher changes from being the assessor to providing the framework for assessment. The teacher’s role during peer feedback can be negotiated to become that of a pure observer, a referee (who judges whether feedback is acceptable), the final assessor, or somewhere in between. The students’ roles during peer feedback are to become not only receivers of feedback but also assessors. In addition, providing ongoing opportunities to develop feedback skills can lead to higher quality feedback and more engagement among students with the objects of feedback (Hvass & Heger, 2017; Zhu, 1995). In our analysis of the six examples, we illustrate how those changes in the didactic contract can take place in practice.

An evaluation process will always have standards for what constitutes a “good” response to a given assignment. These constitute the criteria for the assignment (or test, task). Often, however, these will be implicit: the teacher or examiner knows what he/she is looking for yet has not formulated it explicitly (e.g., Jensen et al., 2020). In the process of implementing peer feedback, a crucial step will be how to communicate, share or even co-develop the criteria with students. The students must understand the criteria in a way that aligns with their learning goals. The formulation of criteria thus represents a deliberate choice of what to highlight as important in the course/assignment and serves as a powerful way to focus the students’ attention.

The six examples illustrate how different ways of implementing peer feedback can address these issues.

METHOD

At two 2-day workshops in a Nordic network collaboration in 2018 and 2019, we presented and discussed nine of our teaching practices that included student peer feedback, all of which are used at one of six different universities in three Nordic countries. First, the focus was on sharing our knowledge and experiences with student peer feedback in practice. Based on this, six of these examples were selected for further analysis (see Table 1 and SI 1); these examples span different educational contexts, aims, and forms of peer feedback.

Table 1. A short description of each of the six examples concerning which university and discipline the peer feedback practice addresses, as well as the framework information and the short name used in the rest of the text

| Short name | University | Level | Approx. size | Discipline |
|---|---|---|---|---|
| “Didactics” | UCPH | Undergraduate | 100 | Science Education |
| “Physics” | JYU | Undergraduate | 70 | Physics |
| “Teachers” | UCPH | Postgraduate | 25 | University Pedagogy |
| “Microbiology” | UCPH | Undergraduate | 200 | Biology |
| “Urban Development” | SDU | Undergraduate | 80 | Engineering |
| “Science projects” | RUC | Undergraduate | 100 | Math + Natural Sciences |

Second, we focused on the challenges we encountered during the didactic development and implementation of the feedback Teaching and Learning Activities (TLA). By looking for common elements across the examples, we identified four themes that we found relevant for discussing the implementation of these examples. We then split into groups to develop each theme into questions that we found central to ask about peer feedback, in all the settings. In total we formulated 14 questions. We then used these questions to investigate, compare, and analyze the six examples.

RESULTS

We identified the following themes that should be covered when planning and implementing peer feedback: (A) Framework and context, (B) Purpose, (C) Criteria, and (D) Support and embedding (Table 2). The four themes and the associated questions shown in Table 2 address challenges encountered during the development of the feedback practices and identified common elements that affect how the feedback practices play out.

Next, we investigate, compare, and analyze the six practical examples of peer feedback (see Table 1 and detailed descriptions in the Supplementary Information [SI 1]) based on these themes and associated questions.

SYNTHESIS AND COMPARISON OF SIX EXAMPLES OF PEER FEEDBACK

A. Framework and context

What is the educational level and context?

The educational setting of our examples varies. In most cases, the setting is a course with ca. 25 (Teachers) to 200 students (Microbiology), but in others (Science projects), the setting is project work done in groups of 3–8 students. In some cases, the students work in groups (for example Urban development and Microbiology); in others, they work individually (Physics). Furthermore, the student population may be homogeneous, e.g., only biology students (Microbiology), or more heterogeneous, e.g., from different natural science fields (Science projects, Didactics). The object of peer feedback is typically a product (or task) produced by the person(s) receiving the feedback. The objects on which the feedback is given are technical drawings (Urban development), written solutions to quantitative physics problems (Physics), essays (Microbiology), a description of a teaching intervention (Teachers) or midterm/final reports (Science projects). In some cases, the feedback is anonymous, and in some cases, it is not. Thus, these examples encompass a variety of the diversity of peer feedback (Gielen et al., 2011), which allowed for a rich discussion of the effects of different choices in setting up and carrying out peer feedback. In some cases, the peer feedback process is voluntary (Urban development, Microbiology), and in others, it is a mandatory part of the course work (Didactics, Physics). The implications of these, and other choices, are discussed below.

Table 2. The 14 questions (within the four themes) that we answered for each of the six different feedback examples and that were used to discuss how general principles for peer feedback play out in practice

| Theme | Questions |
|---|---|
| Framework and context | Describe the educational level and the context |
| | What is the setup for the peer feedback process? (timeline, artifacts, location, technical support systems, anonymous/non-anonymous, group/individual, oral/written, mandatory/voluntary) |
| | Description of products that form the basis for the feedback |
| | How many times will students provide peer feedback on the same or successive products? |
| Purpose | Which educational problem or problems does the peer feedback practice address? |
| | What are the intended learning objectives? |
| | To which product or practice does the feedback point? |
| Criteria for providing feedback | What are the criteria which the students are to use when giving feedback? |
| | By which process are criteria developed? |
| | How are students involved in interpreting/developing the criteria? |
| | How are the criteria presented to students? |
| Support and embedding | Which initiatives are taken to support the students in giving useful peer feedback? |
| | Which initiatives are taken to support the students in using/reflecting the received feedback from the viewpoint of the intended learning goals? |
| | How is feedback embedded in the teaching and learning? |

What kind of products form the basis for the peer feedback? What is the setup for the peer feedback process?

In the two cases of large courses (Physics, Microbiology), feedback was given on written products using an electronic platform. By automating the collection and distribution of the relevant products, such a platform makes it easier for feedback givers to access the products to be reviewed and to contribute their feedback. The development of such platforms is to a large extent driving the implementation of large-scale peer feedback, as they can, e.g., ensure that large quantities of files do not reach the email inbox of the teacher. In other examples, the feedback is given orally (Science projects) or as a combination of written and oral (Urban development; Didactics; Teachers). In the case of oral feedback, the design of the physical learning environment and whether it is conducive to active, cooperative learning can be important (Cornell, 2002). Extra physical rooms may also be required so that noise levels become acceptable. In all cases, the feedback process was supported by the criteria; however, the type of criteria and how they were attained varied greatly (see section C).

How many times will students provide peer feedback on the same or successive products?

As seen from the examples, it is possible to use peer feedback both as a single occurrence and as a continuous, repeated practice. For practices using peer feedback only once (e.g., Microbiology), ensuring the use of feedback is a particular challenge, as other studies indicate that the learning potential of feedback is only fully realized when the students engage with the feedback (see discussion). In the other examples, the feedback occurs multiple times for one product (the Urban development course) or for sequential products (the Didactics course) – this also relates to the horizon of the feedback (see section B) and has implications for training aspects (see section D).

B. Purpose

Which educational problem or problems does the peer feedback practice address?

The reasons and benefits for peer feedback constitute the overall educational rationale of the teacher (or of the system) behind implementing peer feedback in a particular educational setting.

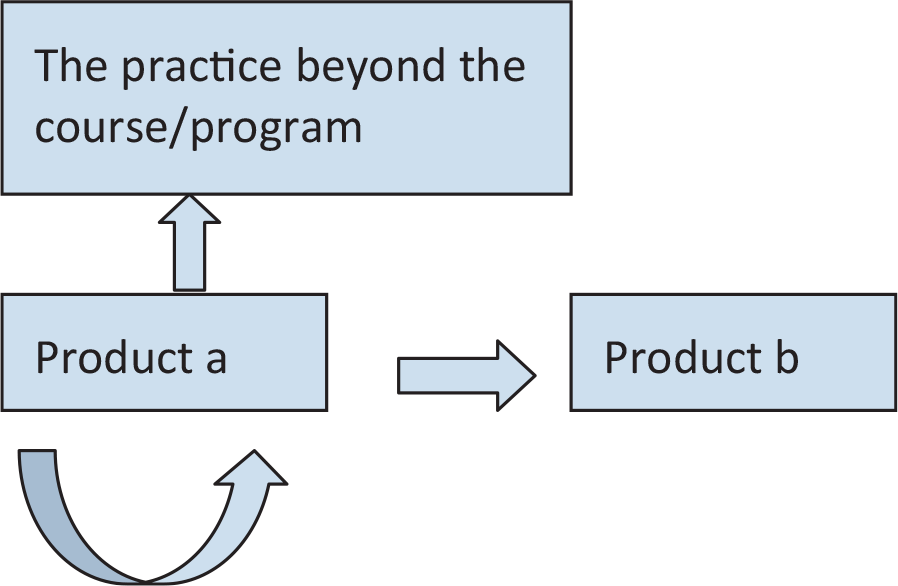

In the Physics example, implementing peer feedback was one part of a suite of novel implementations in basic-level physics courses (see details in Koskinen et al., 2018). An educational rationale behind introducing peer feedback was to have students more engaged in physics learning and integrated into the academic community (thus supporting their motivational beliefs; see Nicol & MacFarlane, 2006). In the case of the Urban development course, the teachers wanted the student groups to get feedback on their technical drawings, which is not possible for the teachers due to the large class size, and therefore peer feedback was implemented. Another objective, with a longer horizon (see also Figure 1), was to prepare the students for the workflow in their future professions, in which peer feedback is an integral component. In the Didactics course, one of the reasons for implementing peer feedback was to support the students, who are planning to become teachers, in learning to give, and reflect on giving, formative feedback.

What are the intended learning objectives?

In addition to the educational rationale, there is usually a specific, local, instructional purpose (the intended learning objective or ILO) of using peer feedback.

We identified three main categories of learning goals in the six examples:

- Content oriented (factual knowledge, conceptual knowledge)

- Structure/process/method oriented (procedural knowledge)

- How to give feedback and/or describe reasoning and how to move forward (meta-cognition)

In many of the examples, clarifying these also helped towards formulating specific criteria (see section C).

In the Urban development course, for example, one of the learning goals is to develop skills for producing technical drawings (which is, in this case, identical to the educational rationale behind introducing peer feedback). In addition, there are objectives in the metacognitive genre, such as learning to critically reflect on ways to apply their knowledge and thus learn to give and use formative feedback. Finally, there is also a more affective objective of learning to value the direct benefit from attending to feedback. While these learning objectives are not directly identical to the educational rationale of preparing students for the workflow characteristic of their future professions, they can still be seen as a way of elaborating this educational rationale.

In the Physics example, the ILOs are about content and about learning how to learn the content. The students work on the same set of problems (a new set provided each week) and give each other feedback on the applied solution processes and suggestions for alternative solution processes. By receiving feedback, the student learns about different approaches to solve the same problem, which can be seen as both content knowledge and procedural knowledge. By giving feedback, the students critically evaluate both others’ and their own approaches to solve problems. In this way, one of the educational rationales is furthered, namely, improving student engagement in physics learning, and, in the process, delivering high quality information about learning (in the sense of Nicol & MacFarlane, 2006).

In the Teachers example, the main goal is to strengthen the participants’ abilities to critically engage with their own learning. In the course, one of the ILOs is to stimulate the new teachers to engage in reflective practices involving documenting teaching practice and teaching development efforts, i.e., scholarship of teaching and learning as described by Shulman (1993).

In Science projects, the focus is on students’ learning to develop procedural knowledge. Student groups give feedback on other students’ reports with a focus on the process of project work; the students typically work in different fields, so they are not required to give detailed, in-depth content-oriented feedback. Rather, the intention is that they learn to give, receive, and use feedback (i.e., engage critically with their own learning in the sense of Nicol & MacFarlane, 2006) as well as perform valuable project work processes (procedural knowledge). Hence, the feedback is often more general, which is challenging for the students since they are sometimes preoccupied with the details (commas and the like).

To which product or practice does the feedback point?

Figure 1 illustrates different possible focus aspects of peer feedback: in one use of feedback, the feedback points to an improvement of the product for which it was provided (Product a; as in Microbiology and Science projects). In another use, the feedback points to a new product (Product b, which may be slightly different from the first; as in Didactics and Physics). Finally, the feedback could point out of the course, for example, to a professional practice or to general study competencies (as in, e.g., Teachers and Urban development).

Figure 1. A graphic representation of the different focus horizons that the peer feedback aims at in the six examples

As perspectives of both students and teachers are essential here, an interesting challenge in relation to using horizons in feedback is to notice if feedback is actually being used to inform practices that lie at the desired horizons. In the Urban development example, students are expected to use checklists in the next course, but some students only saw local relevance for this particular exercise and did not discover check-listing as a generically useful practice in planning engineering designs.

Sometimes, particularly when the feedback points at multiple horizons, it can be difficult for the students to distinguish these and therefore to use the feedback optimally. An example is the Didactics course, where the aims can be content directed (intended to deepen learning relative to the ILOs); the horizon here is the exam (to focus the preparations for the oral exam) as well as professional settings after education (to understand the topics better). It can also be structural- or process oriented; the feedback is then aimed at improving the following themes and reports. Here, the feedback thus points both to a later version of Product a (that is used for the exam) and later products (Product b’s), and sometimes to teaching practices (practice beyond the course). It does not always seem to be clear to the students that there are these different horizons, and in Didactics it does not always seem that teacher and student have the same perception of the horizon. In the next section, we discuss how to communicate the horizons and aims to the students via the criteria, or via the follow-up.

C. Criteria

What are the criteria which the students are to use when giving feedback? By which process are criteria developed? How are the criteria presented to students?

In our examples, we have used different ways of formulating criteria and communicating them to students, and we present the implications of each method below.

1. Rubrics with achievement levels to guide students’ feedback

A rubric with achievement levels (shown in SI 2) was used in Didactics (and a similar one in Physics, see Koskinen & Lämsä, 2019). The achievement levels rubric offers a taxonomy of the degree to which an answer to an assignment meets the teacher’s intentions. A disadvantage of this rubric can be that it may lead to students providing summative feedback rather than formative or reducing the feedback to a “check-list” activity.

2. Rubrics with focus-areas and keywords for each, developed by testimonies from stakeholders

In the Microbiology example, seven generic, and two topic-specific, questions, each with key words for the content, were developed for each assignment (Jensen et al., 2020). The questions were developed from interviews with relevant stakeholders about what the intention and purpose of the assignment is and how it can be solved (reported in Jensen et al., 2020).

3. Open text describing what is expected/desired from the assignments (also from Didactics)

Quotation from the rubric:

The purpose of question 1 is to exemplify/illustrate the external didactic transposition and to make it clear that a subject is constituted by “someone” and is therefore open to debate. At the same time, the thesis must show that the authors can use the four categories for justifying subjects and can give well-founded arguments for their categorization and choices.

This type of rubric gives a more open view than example 1 on the aims of the assignment as well as illustrates the fuzziness of assessment criteria. It introduces wording used in the taxonomic rubric (as in example 1 above) while also including the students more explicitly as interpreters of intentions.

4. Open format: The students were instructed to: “Give formative feedback to support the authors in attaining the learning goals for the assignment.” This was used in the Didactics course for students with experience both in giving feedback and in formative evaluation. Open format delivers the responsibility of interpreting the ILOs, and relating to the intentions of the authors, into the hands of the student. In this way, the students become central players in clarifying what constitutes good performance. The aim in this case was specifically to allow the students to train giving formative feedback.

5. Self-assessment: In the Teachers course, where assignments are related to the participant’s individual teaching practice, the criteria will also often ask assessors to relate the product to their own teaching practices.

An important point is that the final test of the criteria lies in their use – do the students respond as desired? This can be assessed when there is some way for the teacher to engage with the peer feedback, which will be discussed in the next section.

How are the students involved in interpreting and/or developing criteria?

Ensuring that the students understand and are able to use the criteria is essential to attaining the goals. This can partly be achieved by monitoring, e.g., by the students using the option to flag feedback (which many peer feedback platforms include) to invite a teacher into the feedback process.

However, strategies that involve the students explicitly in interpreting, and in some cases also developing and formulating, the criteria, can be both more effective and better aligned with the aim of increasing the students’ autonomy. In order to secure ownership of learning and to support the students in giving and receiving peer feedback, it may thus be relevant to take student voice into account (see also Hämäläinen et al., 2017). In other words, let the students participate in formulating peer feedback criteria together with teachers or supervisors.

In Urban development the students formulate quality criteria for a technical drawing in a plenary session based on a “good” and a “bad” example of such a drawing. Here, part of the learning process is that the students themselves formulate criteria. The students then apply the criteria in their assessment of other groups’ products and in a near-final poster session.

In Didactics, the development of criteria over time has led to a process where the students give suggestions for revising and specifying the criteria that have been developed by the teaching team, and which they have used in the first feedback session. In this manner, alignment between the teachers’ and students’ understanding of the criteria is supported.

The question of involving students in the development of criteria pertains both to students’ critical engagement with their own learning and to the ILOs. In addition, students need to learn how to both provide and receive feedback. How to do this is discussed below in section D on support and embedding.

D. Support and embedding

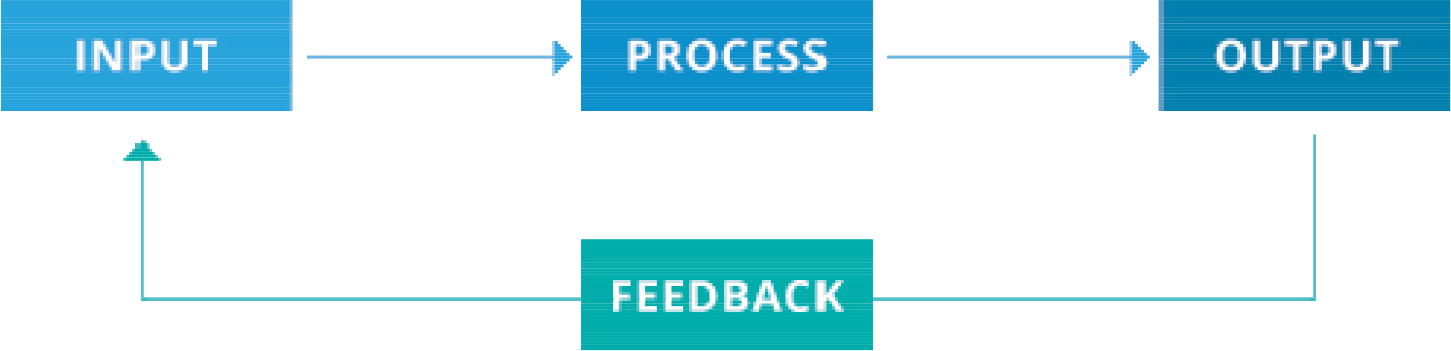

Feedback activities need to be credibly embedded within the TLA to have the desired impact on such activities. One aspect is to support students in understanding the purpose of the peer feedback activities, and of ensuring sufficient quality and validity of the feedback provided. Another aspect is to ensure that space and time are made for providing, making use of, reflecting on and returning to feedback when appropriate. This also implies that the feedback loop is closed (see Figure 2) so that the students are aware of, and actively use, the feedback relative to the relevant horizon (Figure 1).

Which initiatives are taken to support the students in giving useful peer feedback?

We saw in the Science projects example that students might be hesitant to be overly critical. The presence of a teacher who would later carry out the final summative assessment may lead students to show solidarity and attempt to disguise aspects of a text that need work by viewing it too optimistically and uncritically.

If feedback is given only once, the training of the feedback process may have to be by direct instruction i.e., the teacher explicitly states the goals, criteria, and steps of feedback processes, and then the students implement the instructions into their work (as seen in the Microbiology example). But inquiry-based methods, i.e., learning by reflection in practice, are more likely to stimulate deep learning (Biggs, 1988; Dolmans et al., 2016) and allow the students themselves to explore and experience how to provide feedback in a relevant manner. An example of this is seen in Didactics where, after the first feedback session in groups, the students discuss the feedback that they have received and are asked to identify and select examples of feedback formulation that were useful for them. These formulations are collected for the whole class, which is then tasked with identifying what characterizes these examples, leading to a common understanding of what constitutes useful feedback. This approach typically requires iterative feedback processes to help students learn from their previous experiences, and thus provide multiple opportunities for closing the gap towards desired performance.

Which initiatives are taken to support the students in using and reflecting on the feedback from a learning goals perspective?

For feedback to be effective, it must be used by the receiver (see Discussion). In order to help students use and reflect on the feedback they receive, there are at least two central aspects to consider: 1) to be useful, feedback must help students in their work in creating their product (or future products), and 2) feedback must be meaningful in relation to how this product is evaluated (often in summative assessment). This entails both that meaningful feedback should not be provided in response to a final product and that feedback must be aligned with the criteria that are used to assess the final product (Zhang et al., 2017). Thus, peer feedback has to be well aligned with learning objectives, activities, and assessment so that it is not an isolated course activity. For instance, peer feedback that enables students to prepare better for an exam by improving a portfolio used in the exam is more likely to be used by students than feedback aimed at competences which will not be assessed or put to later use. Thus, criterion-based assessment employed in the exam should relate to the assessment criteria employed in the peer feedback activities. This is the case in most of the peer feedback examples considered. For example, in the Teachers example, the products that form the basis for peer feedback can be transformed further by the participants prior to delivering the final teaching portfolio to be assessed summatively. Similarly, in the Science projects example, the midway and end-term evaluations will help and give incentives to the group to improve their work for the exam based on the same criteria used in the exam. In Didactics the students must, in the subsequent assignment, write how they used the feedback on the previous assignment.

How is feedback embedded in the teaching and learning?

Embedding of the peer feedback activities ranged from a voluntary add-on to a course (Microbiology) to restructuring the whole course to be planned around feedback activities as the central structuring mechanism, as in the Physics course and, to a lesser degree, in the Didactics course. If peer feedback is given at the end of the course, it presents a challenge, in terms of student motivation, to use the feedback. Therefore, when embedding peer feedback in TLAs, there is a need for follow-up activities.

DISCUSSION

The landscape of elements in peer feedback that we chart in the themes above can be synthesized by an illustration of a feedback loop as an engineer or biochemist would understand it (Figure 2). Here, a system’s output is measured against a (set of) reference variables and a feedback signal is provided to the system to adjust the subsequent outputs. Likewise, in peer feedback practices, the student’s output (see examples in section A) can be assessed against a set of assessment criteria (see section C), and the feedback-giver provides the feedback to the student who will use this to revise and further develop their work or to inform future work (i.e., horizons; Section B). This whole process of giving and receiving feedback must be supported and embedded (Section D). This model of feedback may also be seen as a version of the formative assessment loops advocated by Harlen (2013) and Dolin et al. (2018), which stress the importance of identifying the subsequent steps in learning and how to walk these steps, i.e. that the learning potential of feedback is only fully realized when the students engage with the feedback (Hattie, 2009; Nash & Winstone, 2017).

The crucial point to keep in mind is that the learner must use the provided feedback before we can designate it as a feedback loop. Therefore, in our opinion, the term feedback should cover only these types of practices.

Figure 2. A model showing feedback as a process in which the effect or output of an action is returned (“fed-back”) to modify the next action (or the process). Such feed-back loops are essential to regulatory mechanisms in biology and in many process control systems
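The engineering sense of a feedback loop described above can be made concrete with a minimal sketch. The function name, the numeric values, and the proportional update rule are illustrative assumptions and are not taken from any of the six examples; the analogy to learning is deliberately loose.

```python
def run_feedback_loop(reference, output, gain, steps):
    """Return the successive outputs of a simple proportional feedback loop.

    All names and values here are hypothetical and only illustrate the idea.
    """
    history = [output]
    for _ in range(steps):
        error = reference - output       # measure the output against the reference
        output = output + gain * error   # feed the signal back to adjust the next output
        history.append(output)
    return history


# Feedback that is used (gain > 0) moves the output towards the reference.
used = run_feedback_loop(reference=10.0, output=0.0, gain=0.5, steps=5)

# Feedback that is produced but never used (gain == 0) changes nothing.
unused = run_feedback_loop(reference=10.0, output=0.0, gain=0.0, steps=5)

print(used)
print(unused)
```

In this sketch, a gain of zero corresponds to a signal that is generated but never acted upon: the loop is not closed, and the output stays where it started.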

Used in this sense, all feedback is formative, i.e., if it is not formative, it is not feedback. However, feedback will often be given, perceived as, and indeed desired to be summative, since staff, students and the whole education system are highly accustomed to focusing on summative assessment rather than formative. Furthermore, Boud and Molloy (2013) point out that such a model of feedback, based on the engineering origin of the term, may tend to place the students in a passive role. However, as shown above, when implementing feedback activities as peer feedback, students can take a much more active part in all parts of the cycle shown in Figure 2. As our examples show, when the students are invited to negotiate criteria, their role can include providing the framework for the assignment, and they thus partake in the responsibility for defining what is central to the topic at hand.

In terms of the didactical contract, the students must accept a new role as assessors, and teachers must support them in this as well as in incorporating received feedback into future work and practices. It is worth considering that in group-based settings, the peer feedback processes are likely to be active also before the delivery of the product, for example, by group members providing peer feedback on each other’s drafts. Thus, the amount of peer feedback received by a student is likely to be higher in group-based product situations.

The quality of peer feedback will, however, be affected by the students’ ability to engage in feedback giving. Hanrahan and Isaacs (2001) note that students perceive themselves both positively and negatively during feedback, since they may consider themselves to be too rude, too kind and polite, or not professional, thorough or critical enough. Students may also find themselves to be too focused on irrelevant or circumstantial aspects to be trusted to give each other feedback (Nilson, 2002, 2003). A more structured peer feedback dialogue might mitigate such effects. However, removing the structural cause or motive for this behavior (e.g., the presence of an examiner during peer feedback dialogues) might do the same. Indeed, Nilson (2002, 2003) argues that the issue is not what students are or what they can do, but one of framing: for instance, instead of asking students to evaluate, one can phrase questions that prompt the feedback giver to react to the work, direct attention to the work, and keep attention on details. “List below the main points of the paper” and “what do you find most compelling?” are two examples of such prompting (Nilson, 2002, p. 3). One important and sometimes overlooked aspect is having the receiver of feedback learn how to identify and ask for the feedback that they need (Haas, 2011), so that they learn to set up and communicate criteria for how they wish their work to develop (see Section C). Conducting discourse regarding the use of and engagement with feedback can be an activity that allows students to interrogate what it means to learn in a discipline and to take ownership of their own learning in the context of a given course (Blair & McGinty, 2013). Ways of supporting this are shown in the examples above.

As shown in the examples, the feedback principle of clarifying what good performance is (Nicol & MacFarlane, 2006) can be difficult to support if there is a lack of clarity about the horizon of the feedback. This is also discussed by Reimann et al. (2019), who ask: How is the feedback horizon communicated to students? Is the horizon clear to both teachers and students? Does the follow-up and/or the criteria clarify what the feedback is to be used for? That an assignment is by no means finished when it is submitted is also a big departure from the ordinary didactical contract; the renegotiation also includes accepting that assessment is not always summative.

The Microbiology example further illustrates both the interpretations of criteria and involvement of other stakeholders in defining them (see details in Jensen et al., 2020). The criteria should help the feedback-giver to focus on the central, most relevant or important issues rather than giving wall-to-wall feedback (see, e.g., Ellegaard et al., 2018, and references therein) or focusing on elements that are not very important for the task at hand (as mentioned previously for Science projects).

In conclusion, these six examples illustrate how the feedback model in Figure 2 plays out in different practice settings, and how placing the students as both receivers and givers of feedback, with active roles in many parts of the feedback loop, can also support students in becoming drivers of their own learning processes.

ACKNOWLEDGEMENTS

This work was funded by Nordplus Higher Education Programme grant no. NPHE-2018/10364. We would also like to thank Mr Aaron Peltoniemi for proofreading and for providing valuable peer feedback on our manuscript. We have no conflicting interests regarding this study.

REFERENCES

- Biggs, J. (1988). The role of metacognition in enhancing learning. Australian Journal of Education, 32(2), 127–138.

- Black, P., Harrison, C., Lee, C., Marshall, B., & Wiliam, D. (2004). Working inside the black box: Assessment for learning in the classroom. Phi Delta Kappan, 86(1), 9–21.

- Blair, A., & McGinty, S. (2013). Feedback-dialogues: Exploring the student perspective. Assessment & Evaluation in Higher Education, 38(4), 466–476.

- Boud, D., & Molloy, E. (2013). Rethinking models of feedback for learning: The challenge of design. Assessment & Evaluation in Higher Education, 38(6), 698–712.

- Brousseau, G. (1997). Theory of didactical situations in mathematics (N. Balacheff, M. Cooper, R. Sutherland & V. Warfield, Eds. and Trans.). Springer.

- Cate, O. T., & Durning, S. (2007). Peer teaching in medical education: Twelve reasons to move from theory to practice. Medical Teacher, 29(6), 591–599.

- Cornell, P. (2002). The impact of changes in teaching and learning on furniture and the learning environment. New Directions for Teaching and Learning, 92, 33–42.

- Dochy, F., Segers, M., & Sluijsmans, D. (1999). The use of self-, peer and co-assessment in higher education: A review. Studies in Higher Education, 24(3), 331–350.

- Dolin, J., Black, P., Harlen, W., & Tiberghien, A. (2018). Exploring relations between summative and formative assessment. In J. Dolin & R. Evans (Eds.), Transforming assessment: Through interplay between practice, research, and policy (pp. 53–80). Springer. https://doi.org/10.1007/978-3-319-63248-3_3

- Dolmans, D. H., Loyens, S. M., Marcq, H., & Gijbels, D. (2016). Deep and surface learning in problem-based learning: A review of the literature. Advances in Health Sciences Education, 21(5), 1087–1112.

- Doyle, E., Buckley, P., & Whelan, J. (2019). Assessment co-creation: An exploratory analysis of opportunities and challenges based on student and instructor perspectives. Teaching in Higher Education, 24, 739–754.

- Ellegaard, M., Damsgaard, L., Bruun, J., & Johannsen, B. F. (2018). Patterns in the form of formative feedback and student response. Assessment & Evaluation in Higher Education, 43(5), 727–744.

- Gielen, S., Dochy, F., & Onghena, P. (2011). An inventory of peer assessment diversity. Assessment & Evaluation in Higher Education, 36(2), 137–155. https://doi.org/10.1080/02602930903221444

- Haas, S. (2011). A writer development group for master’s students: Procedures and benefits. Journal of Academic Writing, 1(1), 88–99.

- Hanrahan, S. J., & Isaacs, G. (2001). Assessing self-and peer-assessment: The students’ views. Higher Education Research & Development, 20(1), 53–70.

- Harlen, W. (2013). Assessment & inquiry-based science education: Issues in policy and practice. Global Network of Science Academies (IAP) Science Education Programme (SEP).

- Hattie, J., & Timperley, H. (2007). The power of feedback. Review of Educational Research, 77(1), 81–112.

- Hattie, J. A. C. (2009). Visible learning: A synthesis of 800+ meta-analyses on achievement. Routledge.

- Hvass, H., & Heger, S. (2018). Brugbar peer feedback: Instruktion og træning, før de studerende selv skal give og modtage [Useful peer feedback: Instruction and practice, before the students themselves give and receive]. Dansk Universitetspædagogisk Tidsskrift, 13(25), 59–70.

- Hämäläinen, R., Kiili, C., & Smith, B. E. (2017). Orchestrating 21st century learning in higher education: A perspective on student voice. British Journal of Educational Technology, 48, 1106–1118. https://doi.org/10.1111/bjet.12533

- Jensen, L. M., Burmølle, M., Johannsen, B. F., Bruun, J., & Ellegaard, M. (2020). Jagten på den gode opgave: Identifikation af kriterier og implementering af peer feedback i praksis [Looking for the good assignment: Identifying criteria and implementing peer feedback]. Dansk Universitetspædagogisk Tidsskrift, 16(28), 16–30.

- Koskinen, P., & Lämsä, J. (2019). Ruotiminen: toimintamalli harjoitustehtävien läpikäyntiin [Solve-Correct-Assess-Negotiate (SCAN): A method for assessing problem solving]. Yliopistopedagogiikka, 26(2), 52–55.

- Koskinen, P., Lämsä, J., Maunuksela, J., Hämäläinen, R., & Viiri, J. (2018). Primetime learning: Collaborative and technology-enhanced studying with genuine teacher presence. International Journal of STEM Education, 5(20), 1–13.

- Lizzio, A., & Wilson, K. (2008). Feedback on assessment: Students’ perceptions of quality and effectiveness. Assessment & Evaluation in Higher Education, 33(3), 263–275.

- May, M., Neutzsky-Wolff, C., Rosthøj, S., Harker-Shuch, I., Chuang, V., Bregnhøj, H., & Henriksen, C. B. (2016). A pedagogical design pattern framework-for sharing experiences and enhancing communities of practice within online and blended learning. Læring og Medier, 16.

- Nash, R., & Winstone, N. E. (2017). Responsibility-sharing in the giving and receiving of assessment feedback. Frontiers in Psychology, 8, 250.

- Nicol, D. J., & Macfarlane, D. (2006). Formative assessment and self-regulated learning: A model and seven principles of good feedback practice. Studies in Higher Education, 31(2), 199–218.

- Nicol, D., Thomson, A., & Breslin, C. (2014). Rethinking feedback practices in higher education: A peer review perspective. Assessment & Evaluation in Higher Education, 39(1), 102–122.

- Nilson, L. B. (2002). Helping students help each other: Making peer feedback more valuable. Toward the Best in the Academy, 14(8), 2–3.

- Nilson, L. B. (2003). Improving student peer feedback. College Teaching, 51(1), 34–38.

- Reimann, N., Sadler, I., & Sambell, K. (2019). What’s in a word? Practices associated with ‘feedforward’ in higher education. Assessment & Evaluation in Higher Education, 44(8), 1279–1290.

- Sadler, D. R. (1987). Specifying and promulgating achievement standards. Oxford Review of Education, 13(2), 191–209.

- Sadler, D. R. (1989). Formative assessment and the design of instructional systems. Instructional Science, 18, 119–144.

- Shulman, L. S. (1993). Teaching as community property: Putting an end to pedagogical solitude. Change, 25(6), 6–7.

- Topping, K. (1998). Peer assessment between students in colleges and universities. Review of Educational Research, 68(3), 249–276.

- Verscheure, I., & Amade-Escot, C. (2007). The gendered construction of physical education content as the result of the differentiated didactic contract. Physical Education and Sport Pedagogy, 12(3), 245–272.

- Wiliam, D. (2011). Embedded formative assessment. Solution Tree Press.

- Winstone, N. E., Nash, R. A., Rowntree, J., & Parker, M. (2017). ‘It’d be useful, but I wouldn’t use it’: Barriers to university students’ feedback seeking and recipience. Studies in Higher Education, 42(11), 2026–2041.

- Winstone, N., Boud, D., Dawson, P., & Heron, M. (2021). From feedback-as-information to feedback-as-process: A linguistic analysis of the feedback literature. Assessment & Evaluation in Higher Education. https://doi.org/10.1080/02602938.2021.1902467

- Zhang, F., Schunn, C. D., & Baikadi, A. (2017). Charting the routes to revision: An interplay of writing goals, peer comments, and self-reflections from peer reviews. Instructional Science, 45(5), 679–707.

- Zhu, W. (1995). Effects of training for peer response on students’ comments and interaction. Written Communication, 12(4), 492–528.

SUPPLEMENTARY INFORMATION 1 – THE TEMPLATE IN TABLE 2 FILLED OUT FOR EACH OF THE SIX EXAMPLES

Example 1. Didactics: The course Fundamentals of didactics for the natural science and mathematics, University of Copenhagen (UCPH), Denmark

| A. Framework: | |

|---|---|

| Describe the educational level and the context | University, bachelor, course with ca. 100 students from different degrees. Aimed at teaching career. The students work on five theme reports in groups of 3–5. |

| What is the setup for the peer feedback activity? (timeline, artifacts, location, technical support systems, anonymous/non-anonymous, group/individual, oral/written, mandatory/voluntary) | The peer feedback activities outlined here are written feedback on a written report and are carried out four times. The product is made by a group of students; the feedback is given by individual students. Thus, each report receives 3–5 sets of feedback, and giving feedback is mandatory. The process takes place on the platform PeerGrade. The feedback must be given within a few days of the assignment deadline and is followed up by class sessions for discussing the feedback activity, criteria and content. The feedback giver is anonymous. |

| Description of products on which students will provide peer feedback | The students deliver five theme assignments, 5 written pages, responses to set questions/tasks, essay. |

| How many times will students provide peer feedback on the same or successive products? | Four times, on successive products (for one report, feedback is given by the teacher). |

| B. Purpose: | |

| Which educational problem or problems does the peer feedback practice address? | To give the students the opportunity to practice giving feedback and to engage the students even more deeply in the learning goals of the course. |

| What are the intended learning objectives? | The intended knowledge is three-fold: Content: Stimulating discussion of the content goals of the current product (this is supported by class discussions). Format: Use of references, presentation and strength of argument, structure of assignment, use of learning theory. Meta: Learning to give formative feedback. |

| To which product or practice does the feedback point? |

|

| C. Criteria: | |

| What are the criteria which students are to use when giving feedback? | Three types of criteria are used (and strengths and weaknesses of each approach are later discussed with the students):

|

| By which process are criteria developed? | They are developed in an iterative process by the teaching-team and discussed with the students after the peer feedback activity. |

| How are students involved in interpreting/developing the criteria? | The criteria are discussed in class with the students, and adjusted if needed. |

| How are the criteria presented to students? | On-line in PeerGrade when the peer feedback assignment is opened (after the product is delivered). |

| D. Support and embedding: | |

| Which initiatives are taken to support the students in giving useful peer feedback? | Presentation of reasons for giving feedback. Discussions in class after the first round of peer feedback: What characterized the feedback that you found useful, leading to identification of general characteristics of useful feedback. Reflection on received feedback in the final report. |

| Which initiatives are taken to support the students in using/reflecting the received feedback from the viewpoint of the learning goals? | Discussions with the groups of students about how they use the feedback. Requirement that the students indicate in the next assignment how the feedback was used. |

| How is feedback embedded in the teaching and learning? | The feedback activity is recurring, formalized, mandatory, and supported by other activities. |

Example 2. Physics: Undergraduate Physics course, University of Jyväskylä (JYU), Finland

| A. Framework: | |

|---|---|

| Describe the educational level and the context | Undergraduate (1st-3rd year) students of natural sciences, mostly physics majors. |

| What is the setup for the peer feedback activity? (timeline, artifacts, location, technical support systems, anonymous/non-anonymous, group/individual, oral/written, mandatory/voluntary) | The peer feedback activities outlined here are written feedback on written solutions to quantitative physics problems, which are carried out seven times in a course. Both the solutions of the problems and the feedback on the solutions are given by individual students. Each student gives feedback on one set of problems, so each student also receives one set of feedback. The process takes place in the TIM (The Interactive Material) environment (https://tim.jyu.fi). The feedback is given within a few days after the submission deadline of the problem set. After that, a group of five students discusses the problems, their solutions, and the feedback given on the solutions with a teacher. The feedback is given anonymously. |

| Description of products on which students will provide peer feedback | Weekly quantitative physics problems (4–6 problems per week) which students independently solve. |

| How many times will students provide peer feedback on the same or successive products? | Once on each of seven products. |

| B. Purpose: | |

| Which educational problem or problems does the peer feedback practice address? | Facing possible misconceptions, seeing multiple approaches, grading the papers. |

| What are the intended learning objectives? | The intended knowledge is three-fold:

|

| To which product or practice does the feedback point? | Solutions on weekly quantitative physics problems: Students practice implementing their procedural knowledge into executable problem-solving activities and giving feedback on other ways of performing similar problem-solving activities. |

| C. Criteria: | |

| What are the criteria which students are to use when giving feedback? | We wanted the criteria to guide students to focus more on the physics principles and assumptions of the problems, not mere “mechanical calculations.” |

| By which process are criteria developed? | The criteria were developed by a group of teachers in an iterative process. |

| How are students involved in interpreting/developing the criteria? | The criteria were clarified based on feedback from students. |

| How are the criteria presented to students? | Criteria are presented and discussed orally before the students hand in an assignment, and can be seen by students before they hand in an assignment. |

| D. Support and embedding: | |

| Which initiatives are taken to support the students in giving useful peer feedback? | Teachers discuss the aims of the peer feedback with students. The assessment rubric is available for students all the time. Examples of the peer feedback are provided for the students. |

| Which initiatives are taken to support the students in using/reflecting the received feedback from the viewpoint of the learning goals? | Practice – the same procedure is repeated weekly. Students receive weekly feedback from teachers in the meetings with a group of five students and a teacher. |

| How is feedback embedded in the teaching and learning? | The peer feedback practice is continuous and also part of the overall course assessment. |

Example 3. Teachers: Teacher development course, UCPH, Denmark

| A. Framework: | |

|---|---|

| Describe the educational level and the context | A teaching development course for assistant professors at the University of Copenhagen (i.e., postgraduate level). The course has a 1-year duration and a typical course size of 20–30 participants. The participants produce written products during the 8-day course and are requested to provide written feedback to peers prior to the following course day. Most assignments/projects are individual, except the first one which is group based. |

| What is the setup for the peer feedback activity? (timeline, artifacts, location, technical support systems, anonymous/non-anonymous, group/individual, oral/written, mandatory/voluntary) | The assignments are delivered in a learning management system (LMS) and are subjected to peer feedback by distribution manually or automatically. Usually, written feedback is provided on the LMS and is followed up by in class (group based) discussions on the course days. Feedback is not anonymous, as it is important for the participants to establish trust in the group, and anonymity may compromise this effort. It may also be hard to maintain anonymity in a small, fairly closely knit, group. Many of the problems described in the assignment involve quite personal aspects of one’s teaching practice. Teachers also provide feedback on selected assignments through a different medium. The first and the last assignment span several months of work. |

| Description of products on which students will provide peer feedback | The type of product varies from brief 1-page descriptions of (aspects) of teaching practice to lengthier analyses of course alignment (3–5 pages), and an intervention study (5–10 pages). |

| How many times will students provide peer feedback on the same or successive products? | 5 times on written products, 2–3 times on presentations (follow-up discussions in class, where learners have considered the received feedback on their assignment). |

| B. Purpose: | |

| Which educational problem or problems does the peer feedback practice address? | University staff should engage in “scholarship of teaching and learning” activities to improve learning and strengthen the role of teaching vs. research. Teachers are expected to be able to reflect on their own teaching practice and the teaching practices of others to improve their own teaching practice and to document such improvement. |

| What are the intended learning objectives? | A central objective is to get participants to reflect on developing teaching practice based on written artifacts and collegial presentations. The objectives include also expanding one’s repertoire of teaching methods, developing participants’ approaches to teaching, improving self-efficacy, and one’s ability to document development through a teaching portfolio. |

| To which product or practice does the feedback point? | The practice is aimed at STEM higher education teaching practice. |

| C. Criteria: | |

| What are the criteria which students are to use when giving feedback? | The criteria for the assignments reflect the themes of the course (e.g., “Student learning,” “Assessment,” etc.) and require that the participants explore an aspect of the theme in relation to their own teaching practices. |

| By which process are criteria developed? | By reference of the addressed theme to the participant’s own teaching practice. |

| How are students involved in interpreting/developing the criteria? | By relating the general theme to (an aspect of) the participant’s own teaching practice. |

| How are the criteria presented to students? | Assessment criteria for the feedback are presented with the peer feedback task in the employed LMS. |

| D. Support and embedding: | |

| Which initiatives are taken to support the students in giving useful peer feedback? | There are exercises in collegial supervision in general before the peer assessment tasks are given, but little else. |

| Which initiatives are taken to support the students in using/reflecting the received feedback from the viewpoint of the learning goals? | The products created during the course can be used in the final teaching portfolio delivery, subject to the changes the participants have made as a result of the feedback. The portfolio will be used by the two supervisors to make a (summative) statement about the participant’s teaching qualifications (along with other material). There is also an option for participants to deliver their final development project for publication, after it has been revised based on the feedback they have received from peers. |

| How is feedback embedded in the teaching and learning? | Collegial development of teaching is an integrated part of the course objectives. |

Example 4. Microbiology: Undergraduate course in Microbiology, UCPH, Denmark

| A. Framework: | |

|---|---|

| Describe the educational level and the context | Undergraduate biology students (ca. 200). |

| What is the setup for the peer feedback activity? (timeline, artifacts, location, technical support systems, anonymous/non-anonymous, group/individual, oral/written, mandatory/voluntary) | The peer feedback activity was a pilot project (described in Jensen et al., 2020) and participation was therefore voluntary. The LMS Canvas was used to facilitate, and the format was written feedback on a written product. Each participant gave feedback on two other essays on the same topic as their own. Both giver and receiver are anonymous. |

| Description of products on which students will provide peer feedback | A draft for a mandatory individual 5-page essay which forms part of the course grade. |

| How many times will students provide peer feedback on the same or successive products? | Once. |

| B. Purpose: | |

| Which educational problem or problems does the peer feedback practice address? | It provides formative feedback (which is called for in student evaluations) on the essay, which is not possible for the teachers due to the large class size. |

| What are the intended learning objectives? | To improve the final essay with regard to both content and form. |

| To which product or practice does the feedback point? | To the final version of the essay (and thereby also the final grade for the course). With regard to structure: to future essays. |

| C. Criteria: | |

| What are the criteria which students are to use when giving feedback? | Seven generic criteria (e.g., structure, references, argumentation). Two content-specific criteria (separate for each of 10 different topics). |

| By which process are criteria developed? | By interviews with all grading teachers about what they consider characterizes the “good report,” by interview with the course responsible, and by the course learning goals (see also Jensen et al., 2020). |

| How are students involved in interpreting/developing the criteria? | They were not involved in this. |

| How are the criteria presented to students? | All students had access to the criteria on-line whether or not they participated in the feedback project. |

| D. Support and embedding: | |

| Which initiatives are taken to support the students in giving useful peer feedback? | Before the feedback activity, the students were invited to: 1) A presentation about giving feedback and 2) A workshop to support the students in giving feedback, which took place during the feedback activity. |

| Which initiatives are taken to support the students in using/reflecting the received feedback from the viewpoint of the learning goals? | The students could contact the coordinator by email during the feedback activity. |

| How is feedback embedded in the teaching and learning? | This is a voluntary offer to the students. |

Example 5. Urban development: Engineering course: Low residential construction – from building program to outline proposals, University of Southern Denmark (SDU), Denmark

| A. Framework: | |

|---|---|

| Describe the educational level and the context | Interdisciplinary course with a theme project in the 1st semester of the bachelor program for civil engineers, with 80 students. The theme is land development in urban areas. The students work on the project in randomized groups of 6–7. |

| What is the setup for the peer feedback activity? (timeline, artifacts, location, technical support systems, anonymous/non-anonymous, group/individual, oral/written, mandatory/voluntary) | The peer feedback activity is divided into 5 steps in the first 3 weeks of the semester, when the students have not tried peer feedback before. The first step is a teacher-controlled part in the class to develop the criteria for peer feedback. The next 2 steps of the peer feedback activity are written student-to-student feedback within the group (2&2) on a teacher-predefined form called the quality assurance document. The next step is a teacher-controlled poster session in the classroom where the drawings are presented on the walls. Each group is an opponent group for all the other groups. One member of the group stands next to the drawing, while the rest circulate in the classroom looking at the other groups’ drawings, writing comments and talking to the group member. This way, each group receives both written and oral peer feedback. In the last step, the students embed the feedback in their groupwork room. The peer feedback activities are all voluntary, but if a group does not present a drawing, its members are not allowed in the classroom during the poster session. |

| Description of products on which students will provide peer feedback | The students design and print a technical drawing for the poster session. The improved drawing will be a part of the final written group report. |

| How many times will students provide peer feedback on the same or successive products? | The peer feedback activity runs twice in the 1st semester and several times later in the same students’ program. |

| B. Purpose: | |

| Which educational problem or problems does the peer feedback practice address? | It provides specific formative feedback on every group’s drawings, which is not possible for the teacher due to the large class size. It prepares students for the workflow in their future profession. |

| What are the intended learning objectives? | The learning goals are: Learning to reflect on and apply their knowledge. Learning to give and use formative feedback. To be inspired by other students’ work. To have students learn to see the direct benefit of attending feedback sessions. |

| To which product or practice does the feedback point? | To make the students’ drawings even better, both now and with future drawings within the same topic. |

| C. Criteria: | |

| What are the criteria which students are to use when giving feedback? | A good example of a drawing and a checklist written in class with input from the students. |

| By which process are criteria developed? | The students look at both good and bad examples in cooperation with the teacher and each other to ascertain what a good drawing should contain. |

| How are students involved in interpreting/developing the criteria? | See previous. |

| How are the criteria presented to students? | They have access to the checklist on the intranet. |

| D. Support and embedding: | |

| Which initiatives are taken to support the students in giving useful peer feedback? | Creating an environment where the student is confident in presenting their own work. Discussing in class what formative feedback is. |

| Which initiatives are taken to support the students in using/reflecting the received feedback from the viewpoint of the learning goals? | The part with student-to-student feedback within the group is written in the quality assurance document containing a column on the left side for the given feedback and a column on the right side for reflections from the receiver of feedback. After the poster session, the teacher provides an oral follow-up, and then the groups decode and discuss their given comments. In the future, they need to point out at least 3 topics they agreed to improve due to the learning goals. |

| How is feedback embedded in the teaching and learning? | The peer feedback practice is continuous and a part of every course throughout the Civil Engineering program. Since the students learn to give and receive/implement useful feedback, it leads to better assignments, which eases the teacher’s evaluation. Its incorporation into course and project descriptions is in progress. |

Example 6. Science projects, University of Roskilde (RUC), Denmark

| A. Framework: | |

|---|---|

| Describe the educational level and the context | The science students (around 100 students/year) do three problem-oriented projects (15 ECTS each) in their first three semesters of their bachelor’s degree. The projects are done in groups of 2–7 students. Each group chooses a problem to work on based on their interests, but the projects are required to illustrate a particular theme of the semester (e.g., Science and Society). A teacher is designated to coordinate the projects among these 100 students and, in particular, introduce various aspects of project work to them. |

| What is the setup for the peer feedback activity? (timeline, artifacts, location, technical support systems, anonymous/non-anonymous, group/individual, oral/written, mandatory/voluntary) | For each group, an opponent group and supervisor are selected. The opponent and the project groups give peer feedback to each other twice during the semester, at midterm and final evaluations, based on written reports. The feedback is oral (but the students can choose to provide written feedback as well), non-anonymous and given in sessions where the two groups meet. It is mandatory for all students to write reports for and participate in the sessions. |

| Description of products on which students will provide peer feedback | At the midterm evaluation, the product is a midterm report written for the occasion. Here, the students motivate their project and, in particular, the research question (problem formulation) and its relation to the theme of the semester, and they describe how they plan to answer the research question. At the final evaluation, the product is a near-finished research report. |

| How many times will students provide peer feedback on the same or successive products? | The students give and receive feedback twice during the semester. |

| B. Purpose: | |

| Which educational problem or problems does the peer feedback practice address? | How do we enable students to become better at doing project work and at giving feedback to their peers? |

| What are the intended learning objectives? | The learning goals are:

|

| To which product or practice does the feedback point? | The products are two written reports produced by the student group. |

| C. Criteria: | |

| What are the criteria which students are to use when giving feedback? | For the midterm evaluation, the criteria are: 1) Is the project problem-oriented? 2) Is the research question a (good) example of the semester theme? 3) Is there a realistic plan for how to answer the research question? For the final evaluation, the criteria are: 1) What are the strong and weak aspects of the project work done by the group, including the project report? 2) How can the report be improved before its final submission for the exam? |

| By which process are criteria developed? | The criteria are fixed and are the same for the first three semesters. |

| How are students involved in interpreting/developing the criteria? | All science students discuss with the teacher in charge of coordinating the projects how the criteria are to be interpreted. This discussion continues within the project groups and with the project supervisors. |

| How are the criteria presented to students? | The students are informed of the criteria by the teacher in charge of coordinating the projects as well as the project supervisor. |

| D. Support and embedding: | |

| Which initiatives are taken to support the students in giving useful peer feedback? | Before the evaluations, the teacher in charge of coordinating the projects introduces the rationale behind the criteria and discusses with the students how to give useful peer feedback. At the evaluations, the supervisors give the students feedback on their feedback. As the feedback setup and the criteria are the same in all three semesters, the students gain experience with the feedback activity over time. |

| Which initiatives are taken to support the students in using/reflecting the received feedback from the viewpoint of the learning goals? | The project supervisor and the opponent supervisor are present during the evaluations. The former subsequently discusses with the students how they can take the feedback into account to improve their project processes; this supports the learning goal of improving the ability to do projects and take feedback into account in the process. The two supervisors give feedback on the feedback to support the students in learning to give feedback. |

| How is feedback embedded in the teaching and learning? | The peer feedback practice is continuous and a part of the first three semesters of the science bachelor program. Since the students learn to give and receive/implement useful feedback, it leads to better reports. |

SUPPLEMENTARY INFORMATION 2: Example (from Didactics) of a rubric with achievement levels to guide students’ feedback

| How are the learning objectives formulated and justified? | |

| i | The learning objectives do not use observable criteria (or action verbs), they are not placed within a taxonomy, or they are missing. |

| ii | The learning objectives partly use observable criteria (including action verbs), and their taxonomic position is justified across subjects. |

| iii | The learning objectives clearly use observable criteria (incl. action verbs), and different potential taxonomic positions are discussed and justified both within and across subjects. |

| iv | The learning objectives clearly use observable criteria (incl. action verbs) and examples are provided to show how they can be interpreted against a taxonomy. The learning objectives are motivated for both the discipline and across disciplines. |

| Justify your evaluation and provide formative feedback to help authors improve their work. |